Overview

Conclusion: From the 2030s onward, the world will look like science fiction.

Note: The claims in this article reflect a great deal of personal opinion; please keep this premise in mind as you read on.

The conclusions are as follows.

- Generalized Intelligence (AI at the level of a human who waits for instructions, or of a cat) is highly likely to emerge in the late 2020s (around 2026).

- Generalized Intelligence will be deployed rapidly, transforming all aspects of society by 2035.

- There is a moderate chance that Artificial General Intelligence (AGI) and superintelligence will be realized within 20 years.

- The world will become science fiction (SF) in the 2030s.

I believe the above can be extrapolated from the latest AI technology developments, reports, online forecasting sites, professional surveys, etc. on a specific chronological basis.

Many people think of AI as of 2022 as just being able to recognize/generate images a bit or make odd chit-chat in one language. It may seem impossible that the world will be science fiction when society is not even digitized yet.

However, it is almost certain from various estimates that AI technology will rapidly develop from around 2026 to the 2030s, transforming all areas of society.

To use an analogy, it will be as if the world changed in just 10 years from the postwar era, with no Internet, to the age of YouTube.

The “world will become science fiction” here is a metaphor for a situation in which general-purpose and practical AI is widespread in society, for example, where each person has an AI assistant, general-purpose robots are roaming the streets, stores, factories, etc., and personal robots like Tachikoma from “Ghost in the Shell” exist. Since there are countless themes in science fiction, I hope you can imagine a society in which various areas are automated by AI.

In this article, I would like to explain what I imagine will happen, when it will happen, and why we can say it will happen.

Imagine the future with clarity

This article will be structured as follows.

- Imagining the future that will be caused mainly by generalized intelligence within the next 10 years → First, we will introduce the image of the future that will be realized by AI through videos and other means.

- After that, we will introduce reports, surveys, and online prediction sites that provide evidence of when various types of AI (generalized intelligence, general artificial intelligence, and super intelligence) will be realized.

- Finally, I will present a discussion of the social impact from both economic and political perspectives.

In Japan (my country of origin), there are few attempts to extrapolate the future on a specific chronological basis regarding advanced technology, but there are many such estimates outside of Japan, so I will summarize them and add my personal feeling as well.

Although the future is strictly unpredictable, it seems possible to imagine what will happen with some probability range from what is currently known.

In this article I will not explain current AI technology in detail, nor will I let my imagination run freely about everything that could happen in the future (although both are extremely important).

Instead, I will try to imagine the future on a “concrete chronological basis”, using reasonable inferences that focus primarily on the AI technologies that have been accelerating in recent years.

Imagining and predicting the future based on a concrete chronology may remind one of 20th century futurology, and may be viewed critically from all sides. However, I am motivated to share what is being considered in various places today.

I am by nature optimistic about technology, but I would like to reason from a minimal set of hypotheses.

To state the conclusion first, the phased evolution of AI in chronological order is summarized below. The rationale will be explained later.

- ① ANI (Artificial Narrow Intelligence) – by 2025

- ② GI (Generalized Intelligence), at the level of a human waiting for instructions or of a cat – realized around 2026~2030

- ③ AGI (Artificial General Intelligence) – realized around 2035~2040

- ④ ASI (Artificial Super Intelligence) – realized around 2038~2045

- Here, ANI is an AI that performs specific tasks. Image generation AI is included here.

- Generalized intelligence is AI that is more versatile and practical than narrow AI: a non-proactive intelligence at the level of a human waiting for instructions, or of a cat. (I believe Stability AI CIO Daniel Jeffries coined the term; see his article “The Coming Age of Generalized AI” on medium.com.)

- AGI is an intelligence that can perform almost any task that the average human can perform, and also has an inquisitive, curious, and active mind about the world.

- Superintelligence is an intelligence that is much smarter than the best human brains in virtually every field (including scientific creativity, general knowledge, and social skills).

- (*I’m sure there are other definitions of each of the above AI terms, but please take this as a rough discussion.)

Personally, I believe that generalized intelligence will almost certainly be realized, taking into account a variety of information.

As for AGI and superintelligence, online prediction sites and reports indicate that there is a reasonable chance that they will be realized within 20~30 years (by 2040~2050).

Personally, I think it will be realized within 15~20 years (2035~2040).

(*The transition from AGI to superintelligence is likely to take a few years rather than a few decades, so the gap between the two realization dates will be several years. The reasons are discussed in the chapter on superintelligence.)

Images of Future AI Technology

In this chapter, I would like to give a concrete image of how society will change in the 2030s if generalized intelligence is realized. I will explain in the following order.

- Software UI becomes natural language

- Robots have versatility and utility

- Coding AI

- Evolution of Metaverse AI

- Self-driving cars

- AI accelerates science and technology

- Future Image Summary

Software UI becomes natural language

As the CEO of Runway mentions above, natural language processing and multimodal AI will evolve to the point of performing various tasks by interacting directly with software.

We will probably begin to see and feel this in society after 2026, when generalized intelligence will begin to emerge.

We are already starting to see AI that uses natural language processing to analyze search queries like the following and display relevant web pages.

In addition, the web interface of the future may look like the following. This is an image of an AI that interactively and clearly changes the visual structure of the web to present the information you want to know in natural language.

Web interfaces today are static and can only present information in a uniform way, but starting in the late 2020s they will be able to display information in a custom, individualized way. Real-time interaction with AI is expected to extend to all media, including books, movies, and games.

In addition, I can imagine that, starting in 2026, the reception desks of stores and department stores will be replaced by AI that combines natural language processing, vision, and hearing in a multimodal way, as shown below. In my opinion, such AI will be in wide use by 2035, about 10 years after 2026. This is truly a science fiction-like scene.

And as shown below, people will be able to chat about all sorts of things with a personal assistant that is indistinguishable from a human (or even a character), enjoy traveling together with it, and have it handle tasks like a secretary. Roughly speaking, I estimate that this too will become widespread in the late 2020s and early 2030s.

Below is an image of AI assisting humans in their work. This too will become widespread starting in the late 2020s.

Robots have versatility and utility

The evolution of AI technology applied to robots is expected to make it possible for robots to perform flexible movements like the one in the video below in response to various environments by around 2026. This video was probably shot after many trials and errors in a well-developed environment, but I believe that robots will begin to be able to achieve this level of performance in a stable manner. It is also likely that they will be able to understand natural language to some extent and perform simple tasks.

Considering this, I feel that Optimus, the humanoid robot whose development Tesla announced in 2021, is starting at a good time given the expected progress of AI.

In fact, in its aggressive forecast (the “blue sky” scenario), Goldman Sachs estimates that by 2035 the humanoid robot market will be worth $154 billion, about the same size as the EV market in 2021.

Considering that the above Goldman Sachs estimate is positive, we believe that humanoid robots will start to become versatile and practical around 2026, gradually penetrate society, and by 2035, the public will see them more frequently in various situations.

In addition, humanoid robots will inevitably begin to be applied to military technology, as shown in the following video (which I assume is computer graphics or something). Although no one seems to realize it now, there is a possibility that such humanoid military robots will begin to be used worldwide around the late 2020s. I am not familiar with military science, so I do not know how they will be used and regulated, but it will be technologically feasible. I can imagine a lot of ethical and social issues that would arise.

The following is a video that may help you visualize the industrial applications of general-purpose robots. General-purpose robot arms that can move freely in factories and kitchens and understand natural language will probably be put to practical use by the mid-2020s and spread rapidly throughout society. This is exactly the world view of Ghost in the Shell.

For a cuter example, we feel that personal robots such as the following will become even more practical in the home or hospitality industry as toys or assistants for children, and will start to become popular in the late 2020s.

Within a decade, robots will be active in game arcades and in the training of table tennis players, as shown below.

We believe that robots with realistic reproductions of human faces will be used to serve customers, as shown below. I believe that this will also become widespread starting in the late 2020s. My personal intuition tells me that by 2040, ultra-realistic humanoid robots will emerge that are completely indistinguishable from ordinary humans, unless they are dissected (advances in materials chemistry and soft robotics).

Coding AI

In the following Metaculus forecast, the question “Will AI have the ability to do more than 10,000 lines of coding without error before 2030?” is given a 50% probability of “yes”. Even allowing some leeway, I believe it is highly likely that by 2035 AI will exist, and be released, that can generate almost any kind of code at any scale as long as the requirements are properly defined.

https://www.metaculus.com/questions/11188/ai-as-a-competent-programmer-before-2030/

In the OpenAI Codex demo below, when you ask Codex in natural language to simulate a problem, the AI generates code while explaining it in natural language. If it makes a mistake in code generation, it corrects the code and solves the problem on its own.

Because the AI can generate code while conversing in natural language, I see a possible solution to the problem of not knowing why the AI generated a given piece of code.

Based on the above current situation and the Metaculus forecast, I believe that, depending on the size of the source code, small-scale programming (a few dozen lines) will be feasible by 2025, medium-scale programming (several hundred to a thousand lines) by 2030, and large-scale programming (several tens of thousands of lines) by 2035 (around the time of the AGI realization forecast), as long as the requirements are given.

This is because we believe that by 2035, the estimated time of AGI realization, AI will almost certainly be able to perform tasks in the form of answering questions given to it.

I believe that by 2035, generalized intelligence (at the level of humans waiting for instructions) will be surpassed, and an AI will be born that even defines requirements by listening to requests.

In the 2040s, I have a feeling that the job of programmer as we know it will probably disappear, while the job of defining requirements and checking for bugs in collaboration with AI will probably remain.

Evolution of Metaverse AI

AI will surely be used in the metaverse, VR, AR, MR, and so on from the mid to late 2020s.

You will be able to turn any object you like into an AI character on AR, for example, as shown below.

Of course, it should also be possible to create any space you want using natural language processing on the metaverse, as shown below.

The real world should also become a digital twin world, where the real world is simulated on a virtual world, as shown below. The scale of this will be so large that it will be introduced gradually starting in the late 2020s and may go into full swing after 2035.

Self-driving cars

According to the Metaculus forecast below (as of November 2, 2022), the median (50% probability) date for Level 5 automated driving becoming commercially available is 2031.

I personally think that this is a rather good estimate, since it is five years after generalized intelligence is developed around 2026.

I have seen some articles saying that automated driving has already stalled, but my sense is that AI development will accelerate from here.

I think there is a high possibility that, around 2030, a Level 5 car will come to market with enough social consensus that it is acceptable to let it do the driving.

Metaculus: “When will these degrees of self-driving car autonomy be developed and commercially available?” (www.metaculus.com)

Globally, self-driving cabs have already started Level 4 self-driving services in limited areas for commercial purposes. And in light of the above assumptions, Level 5 self-driving cabs, which do not limit the location of travel, are expected to begin service as early as the 2030s.

Also, the average service life of a car currently on the market is about 10 years. Even if we add a buffer and assume 15 years, we can imagine that even if few people buy self-driving cars in 2030, many people will have bought them by 2045. We can then assume that by 2060, another 15 years on, most cars in society will be self-driving.
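The rough arithmetic above can be written down as a small sketch. This is only an illustration of the reasoning in this section, not a market model: the function name `fleet_milestones` and the idea that the whole fleet turns over in fixed 15-year cycles are my own simplifying assumptions.

```python
def fleet_milestones(first_sale_year: int, replacement_years: int) -> dict:
    """Rough milestone years under a fixed replacement-cycle assumption."""
    # One replacement cycle after launch: many owners have had a chance to switch.
    widely_purchased = first_sale_year + replacement_years
    # A second cycle later: most cars still on the road were bought after launch.
    mostly_self_driving = widely_purchased + replacement_years
    return {
        "first_sale": first_sale_year,
        "widely_purchased": widely_purchased,
        "mostly_self_driving": mostly_self_driving,
    }


# Assumed inputs taken from the text: Level 5 cars on sale around 2030,
# and a buffered replacement cycle of 15 years.
milestones = fleet_milestones(first_sale_year=2030, replacement_years=15)
print(milestones)
```

Changing `replacement_years` to 10 (the average figure mentioned above, without the buffer) pulls both later milestones forward, which shows how sensitive the 2045 and 2060 dates are to that single assumption.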

AI accelerates science and technology

As shown in the URL below, there seems to be a Japanese project that aims to build an AI capable of research that could win a Nobel Prize by 2050.

Omron to make AI a researcher aiming for the Nobel Prize: reading papers and forming its own hypotheses (www.nikkei.com)

The goal of the above project is to learn from papers (text and images) and formulate new hypotheses in 2027~2030, to be able to submit them to famous scientific journals in 2040, and to win the Nobel Prize in 2050.

Recently, AI developed by DeepMind has predicted the 3D structure of proteins and found algorithms that speed up matrix multiplication, and Preferred Networks has developed Matlantis (quantum simulation acceleration). AI that accelerates science and technology, or discovers its laws, is appearing one after another.

Although AI has not yet reached the stage where it can read papers from around the world and formulate hypotheses, I believe it will be able to generate new hypotheses in the late 2020s as its natural language processing capabilities improve and research on areas such as world models progresses.

If general-purpose artificial intelligence is realized, there is a great possibility that it will be scaled up to make a series of scientific discoveries that humans cannot even imagine.

If AGI is realized by around 2040, it is not impossible to achieve the goal of a Nobel Prize in 2050.

There is also a possibility that AI will accelerate science and technology itself and even become self-referential by the 2040s.

The benefits to be gained are immeasurable, but if things tilt toward the downsides, this could decide not only whether humanity goes extinct but also the fate of life in the entire universe.

Future Image Summary

The above is a list of materials that will give you an idea of how generalized intelligence will affect society. All of these technologies seem like something you would see in the world of science fiction, and many of you may find it hard to believe given the current social landscape. Even I don’t really feel it as I introduce them, but it seems to me that all of them will be realized within 10 years (I will explain why in the next chapter).

Even if AGI and superintelligence cannot be developed within a few decades, AI that is practical enough and versatile enough within the next decade will transform all areas of society.

In the next section, I will explain how the following timeline of AI evolution can be derived from generalized intelligence, general-purpose artificial intelligence, and superintelligence, in that order.

- ① ANI (Artificial Narrow Intelligence) – by 2025

- ② GI (Generalized Intelligence), at the level of a human waiting for instructions or of a cat – realized around 2026~2030

- ③ AGI (Artificial General Intelligence) – realized around 2035~2040

- ④ ASI (Artificial Super Intelligence) – realized around 2038~2045

Generalized Intelligence (GI)

To state the conclusion first, generalized intelligence is very likely to emerge by roughly 2026. I will explain in the following order.

- Introduction to the recent acceleration of AI trends

- Definition of generalized intelligence and technologies needed to realize it

- Predictive aggregation site Metaculus

- Report on human-level learning to be reached in 2026

- Summary of estimated time for realization of generalized intelligence

Acceleration of Recent AI Trends

First, before we talk about generalized intelligence, let me summarize the state of the art of AI for those who do not watch AI trends very often.

First, in 2012, a team led by Professor Geoffrey Hinton won an image recognition competition by a wide margin over second place using AlexNet, a convolutional neural network. That moment started the deep learning boom, and October 2022 marked its 10th anniversary.

In that time, AI has surpassed human accuracy in image classification tasks (circa 2015), beaten the best human players at Go and Shogi (circa 2017), defeated the world’s best players at StarCraft II, an imperfect-information game (2019), and, with GPT-3, begun generating text that is indistinguishable from text written by humans (2020).

In 2022, Midjourney, an image generation AI, was released at the end of July, and Stable Diffusion (SD) was open-sourced in August. Since then, GitHub traffic for SD has been soaring, and countless image generation AI services have been created.

As of December 2022, impressive generative AI technologies are appearing one after another: not only image generation from text instructions (text2img), but also img2img (image editing), text2music (music generation), text2motion (human motion), text-to-3D (3D object generation), text2video (video generation), text2code, text2text (ChatGPT), and others.

While the entertainment and creative technologies above are the ones most likely to be covered by the media, many other AI models were announced in 2022.

For example, Gato (DeepMind), a multimodal AI that can perform tasks ranging from natural language processing to games to robot manipulation with a single neural network → could lead to general-purpose robots

A Generalist Agent (www.deepmind.com)

AlphaTensor (DeepMind), which accelerates computation → could accelerate science and technology

Discovering novel algorithms with AlphaTensor (www.deepmind.com)

Algorithm Distillation (DeepMind), in which a Transformer model is trained on the reinforcement learning process itself → could lead to general task execution by agents

VIMA (NVIDIA), which lets robots be instructed through language and images → could lead to general-purpose robots that accept more flexible instructions

VIMA | General Robot Manipulation with Multimodal Prompts (vimalabs.github.io)

ACT-1 (Adept), which replaces all operations on a PC with a natural language UI → could be a stepping stone toward all computers having natural language UIs

ACT-1: Transformer for Actions (www.adept.ai)

As described above, we are beginning to see remarkable developments in the application of AI technology in all areas, and it seems that the basic technology for the future image described in the previous chapters is already in place.

Part of this remarkable progress can be attributed to the maturation of the Transformer model introduced in 2017, the rapid growth of AI-dedicated hardware, and the combined evolution of software and hardware, including training on large amounts of data.

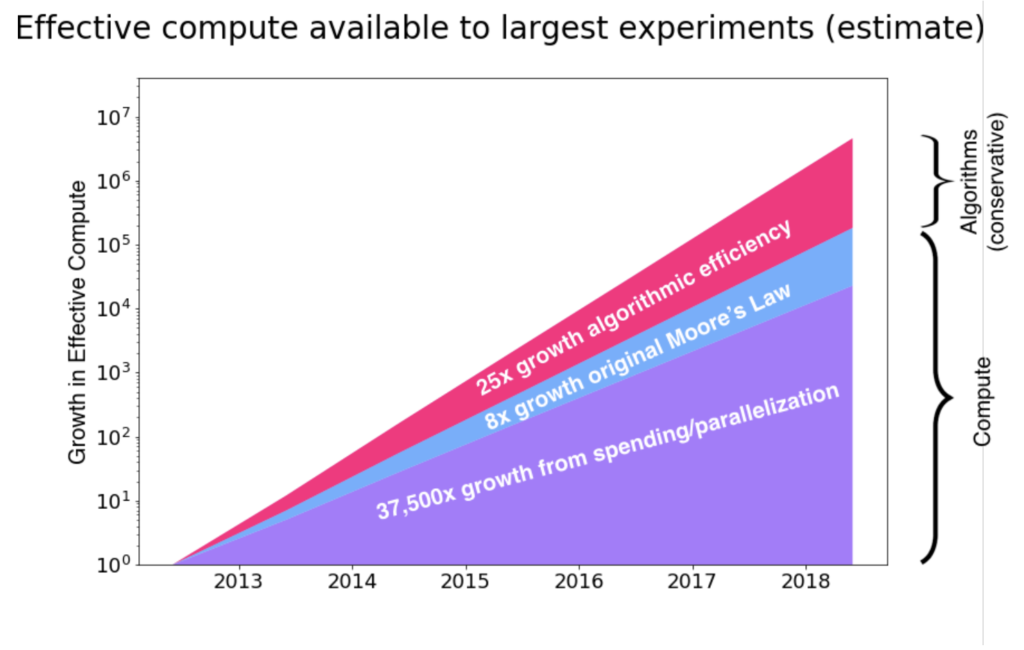

In fact, according to OpenAI’s paper on how much hardware and software have advanced in the AI field, as shown in the figure above, in some areas the combined effect of expanded compute resources and algorithmic improvement amounted to a 7.5-million-fold increase in about 7 years from 2012.

https://openai.com/blog/ai-and-efficiency/

Although it is doubtful that this explosive trend (mainly in hardware) will continue, it seems to me that this is one piece of data that helps explain the explosive evolution of AI up to now.
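To get a feel for how steep a 7.5-million-fold increase over roughly 7 years is, it can be converted into an implied doubling time. The following is a minimal sketch of that arithmetic only. It assumes smooth exponential growth over the whole period, which is my simplification and not a claim made by the OpenAI material, and the function name is hypothetical.

```python
import math


def implied_doubling_time_months(growth_factor: float, years: float) -> float:
    """Doubling time implied by a total growth factor, assuming steady exponential growth."""
    doublings = math.log2(growth_factor)  # how many times the quantity doubled
    return years * 12 / doublings


# Figures quoted in the text: about 7.5 million times in about 7 years.
months = implied_doubling_time_months(growth_factor=7.5e6, years=7)
print(f"Implied doubling time: {months:.1f} months")
```

Under these assumptions the result is a doubling roughly every 3.7 months, which makes it easier to see why I doubt the trend can continue at the same pace, particularly on the hardware side.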

Personally, I think that the evolution of hardware will slow down in the future (it has been at an extraordinary level), but the evolution of algorithms will increase computational and data efficiency. I also think that AI will continue to evolve further by compressing and distilling the AI models that have been learned up to now and using them effectively.

What is Generalized Intelligence (GI)?

With the above sense of acceleration of recent AI development, Stability AI CIO Daniel Jeffries believes that what he calls generalized intelligence is feasible in the near future.

It is unclear whether the term Generalized Intelligence is a technical term (it seems to have been coined by Daniel Jeffries), but it is a metaphor for AI that performs incredibly well in a single problem domain or in several domains, cat-level intelligence.

The Coming Age of Generalized AI In this article I break down the most cutting edge, practical medium.com

My personal interpretation is that GI is an AI that has a certain amount of versatility and utility, but has no intrinsic active or curious nature.

However, generalized intelligence is different from the single-task (image recognition, Go AI) and unimodal (large-scale language models, etc.) ANIs that are common in current AI.

It is an AI that can practically perform a variety of tasks across multiple modalities, for example, language, vision, hearing, and robot manipulation.

Although it is a bit of a leap, we can think of generalized intelligence as an AI that has both general-purpose and practical intelligence at the level of a human waiting for instructions without active behavior.

The above article also says that there are two problems with current AI.

- One is that current AI, especially natural language processing AI such as GPT-3, only produces plausible-sounding text from patterns and cannot reason logically or with common sense.

- Another problem is that when it tries to learn a new task, it forgets the tasks it learned in the past.

However, there are already signs that these problems are being solved, and the article argues that AI in the next decade will have such incredible scope and capability that today’s machines will look like early mud huts next to skyscrapers (a metaphor that sounds unusual when translated into Japanese).

For example, the problem of merely conversing in patterns can be addressed by search-based or rule-based AI (using reinforcement learning), and the latter problem can be addressed by Bi-Level Continual Learning/Progress and Compress approaches.

Personally, I have also been concerned about the flaws in current AI’s logical reasoning and its lack of common-sense reasoning. However, I feel that, depending on the definition, these deficiencies are being overcome to some extent.

I think logical thinking can be improved by chain of thought (CoT), majority voting (self-consistency), guidance through reinforcement learning, fine-tuning, and the use of external knowledge (databases, program invocation, physical simulation, etc.).
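As a rough illustration of the self-consistency idea mentioned above: the model is asked the same question several times with different reasoning paths, and the most common final answer is kept. The sketch below shows only the voting step. The sampled answers are hard-coded stand-ins that I made up for illustration, no real model API is called, and the function name is hypothetical.

```python
from collections import Counter


def self_consistent_answer(sampled_answers: list) -> tuple:
    """Return the majority answer and the share of samples that agree with it."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    # Normalize lightly so that "18" and " 18 " count as the same answer.
    counts = Counter(answer.strip().lower() for answer in sampled_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(sampled_answers)


# Hypothetical final answers from five independently sampled reasoning chains.
samples = ["18", "18", "17", " 18 ", "20"]
answer, agreement = self_consistent_answer(samples)
print(answer, agreement)
```

The agreement share can also serve as a crude confidence signal: when the sampled chains disagree widely, the majority answer deserves less trust.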

In the area of common-sense reasoning, multimodal AI (AI that integrates vision, hearing, movement, and language), which has already begun to accelerate research and development, will make it possible for AI to perform common-sense reasoning.

Also, with the recent progress in meta-learning research, there may be a way to efficiently acquire common sense by distilling existing learning models.

In addition, I feel that the symbol grounding problem (machines cannot connect words to their meanings) and the frame problem (the real world has too many things to consider for machines to act), which are traditional AI problems, have been virtually solved by recent improvements such as multimodal AI, AI that learns elementary physics from images, and better logical thinking, as explained in the following article.

https://note.com/it_navi/n/n83a5152ae458#F035E1AC-1315-4A2D-8B8E-39E1FB257DB1

Even if AI does not understand the world in any essential sense, we are already seeing signs of solutions for generality, common sense, and logical thinking, as described above. I therefore feel that by around 2026 we will almost certainly have some general-purpose, practical AI (that is, generalized intelligence) that handles the symbol grounding problem and the frame problem at the level of real-world problems, even if they remain unresolved in theory.

Metaculus

Metaculus is an online forecasting platform that started around 2015.

The aggregation engine visualizes the forecast timing of a technology’s realization as a probability density function. It also gives more weight to forecasts from users with a good track record, and rates a forecast more highly the more it differs from those of other users. There is also a system that awards prizes for forecasting accuracy.

Predicting the future (pinpointing that a given event will occur at a given time) is essentially impossible. However, it is possible to make predictions with some range by having each person set up a probability distribution, and in 94% of cases the results are reportedly better guesses than random predictions.

Simply put, some people are optimistic and some are pessimistic about when a given technology will be realized, so these individual biases are statistically superimposed (collective intelligence) to estimate a more plausible and realistic forecast date.
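To make the idea of statistically combining optimistic and pessimistic forecasts a little more concrete, here is a minimal sketch using a weighted median, where forecasters with a better track record get a larger weight. This is not the actual Metaculus algorithm, which I have not reproduced here. The forecast years and weights are invented purely for illustration.

```python
def weighted_median(values: list, weights: list) -> float:
    """Smallest value at which the cumulative weight reaches half of the total."""
    if not values or len(values) != len(weights):
        raise ValueError("values and weights must be non-empty and the same length")
    total = sum(weights)
    cumulative = 0.0
    for value, weight in sorted(zip(values, weights)):
        cumulative += weight
        if cumulative >= total / 2:
            return value
    raise ValueError("weights must sum to a positive number")


# Hypothetical forecasts of a realization year, from optimistic to pessimistic.
forecast_years = [2025, 2027, 2030, 2045, 2060]
# Hypothetical track-record weights (larger means historically more accurate).
track_record = [1, 3, 2, 1, 1]

aggregate = weighted_median(forecast_years, track_record)
plain = weighted_median(forecast_years, [1] * len(forecast_years))
print(aggregate, plain)
```

In this toy example the unweighted median is 2030, while weighting by track record moves the aggregate to 2027. A median is used rather than a mean so that a single extreme forecast such as 2060 does not drag the result.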

Metaculus FAQ (www.metaculus.com)

For the Metaculus question “When will a weak general-purpose AI system (≈ generalized intelligence) be realized?”, the median (50% probability) estimate as of December 14, 2022 is 2027.

Metaculus: “When will the first weakly general AI system be devised, tested, and publicly announced?” (www.metaculus.com)

This definition of a weak general-purpose AI system includes the ability to pass a text-based Turing test, to perform Atari’s Montezuma’s Revenge game with only visual input and a short learning curve, and to perform reasonably well on the SAT exam with only image input.

The definition of a weak general-purpose AI system also includes the ability to do these things “in a single integrated system”, and to be able to explain what it is doing as appropriate.

This may be similar to the definition of generalized intelligence.

This estimate of when a weak general-purpose AI system would be realized had a 50% date of 2047 until about a year ago (November 2021), but as of December 14, 2022 it has moved forward by almost 20 years, to 2027.

The reason may be due to the recent rapid evolution of natural language processing AI and other multimodal AI such as GATO.

Popular benchmarks in the AI field tend to take less and less time to reach human scores. GLUE, a language understanding benchmark, was cleared in 9 months; SuperGLUE, a collection of harder tasks that was said to be out of reach for the time being, was cleared in 18 months; and BIG-bench reached the average human score before the paper was even published. pic.twitter.com/yEUO366gqi (小猫遊りょう(たかにゃし・りょう) (@jaguring1), July 5, 2022)

Also, at the end of October 2022, Flan-PaLM, a general-purpose language model instruction-finetuned on 1,836 tasks, scored 75.2% on MMLU, a four-choice question benchmark covering 57 subjects including mathematics, physics, law, and history.

The forecast was for “about 75% on MMLU as of June 2024,” so this level was reached more than a year and a half earlier than expected. https://t.co/6Sz40oQ1O7 (小猫遊りょう(たかにゃし・りょう) (@jaguring1), October 21, 2022)

Since the Metaculus forecast can move forward by almost 20 years in a single year (from 2047 to 2027) depending on the progress of AI, I personally believe that the realization date of a weak general-purpose AI system will eventually move forward a little further (to around 2026), although it may fluctuate up or down.

Human-level intelligence in 2026?

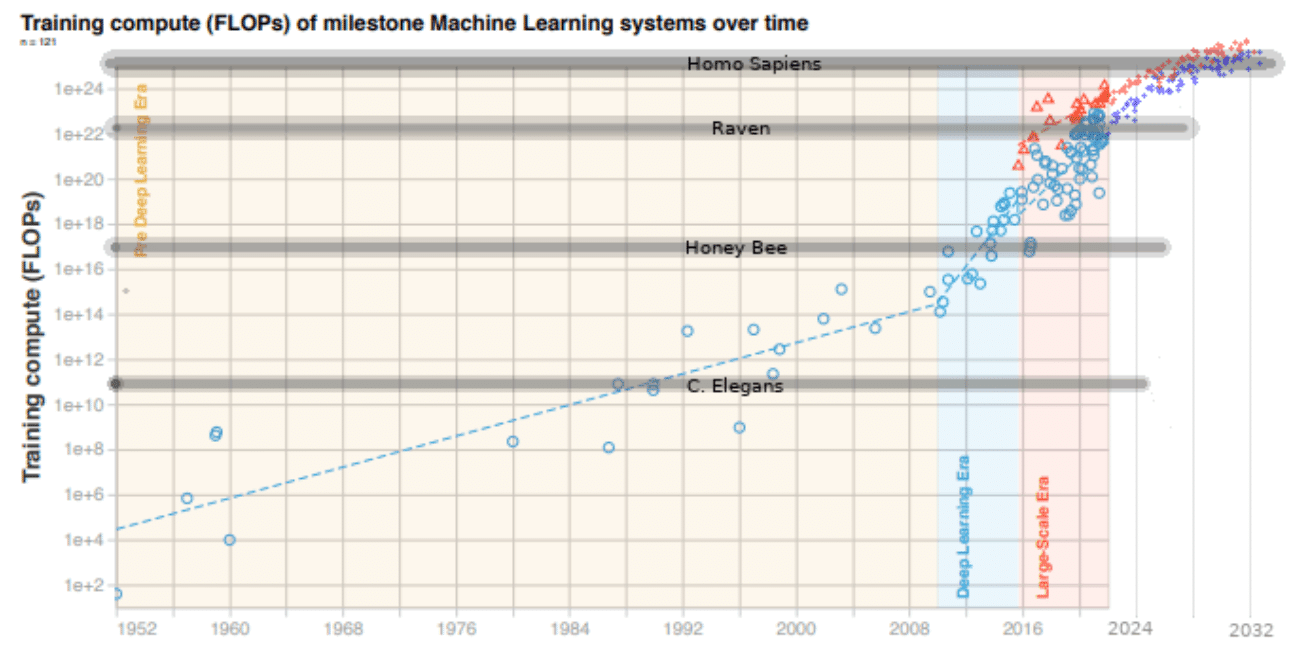

As I mentioned in my tweet below, there is a report suggesting that, on a simple comparison, AI models are highly likely by 2026 to be trained with as much computation as a human brain performs over about 30 years.

This report is based on the bio-anchor hypothesis, which states that when the amount of learning in the brain and the amount of learning in the AI model are the same, the intelligence level will also be the same.

However, I do not think this will immediately lead to AGI. Personally, I think it will be difficult to achieve AGI within 5 years, given that we are still searching for the clues that will lead to it.

However, I feel that it can be used to estimate the timing of the realization of generalized intelligence (GI).

In other words, I personally feel that this document is a useful reference for estimating the time of realization of AI that does not have active involvement in the world, but has some general versatility and utility.

Summary of Estimated Time of Generalized Intelligence Realization

In summary, the recent acceleration of AI trends (improvements in logical thinking, common-sense reasoning, and forgetting) suggests that there are not that many fundamental technical challenges left for generalized intelligence at this time.

The Metaculus forecast also gives a 50% probability that a weak general-purpose AI system will be realized by roughly 2027, and I think that both AI experts and amateurs sense firsthand that realization in the near term (by 2030) is quite likely.

Also, taking into account the fact that the prediction of the time of realization of weak general-purpose systems was moved forward by almost 20 years from 2021 to 2022, I feel that there is a possibility that it will be moved forward by another 1~2 years.

If we also take into account the report that AGI will be realized by 2026, when the computation used by large-scale models catches up with the amount of learning that humans have done by the age of 30 (read that as generalized intelligence, since I think AGI itself is not yet within reach), I personally believe that AI that is general-purpose (i.e., capable of performing multimodal tasks such as vision, hearing, movement, and language) and practical (i.e., each task has a performance level close to that of humans) will almost certainly be realized by around 2026.

AGI

I have talked about generalized intelligence that has a certain level of generality and utility, but with the acceleration of AI development, there has been a lot of discussion about AGI (Artificial General Intelligence), which is truly human-level intelligence. Here, I would like to consider AGI as an active intelligence that has human-level versatility and practicality and can actively participate in the world.

In conclusion, there are aggressive estimates that consider the time of realization of AGI to be roughly around 2030, intermediate estimates that consider it to be around 2040, slightly conservative estimates that consider it to be around 2060, and some who believe that it will be difficult even during this century.

Personally, I believe it will be realized around 2035~2040. I will explain in the following order.

- Forecasting and Aggregation Site Metaculus

- LessWrong Report

- Proactive Reporting

- Expert Surveys

- Celebrity Predictions

- Brain-referenced AI (supplemental)

- Connectome (personal forecast)

- Summary of AGI realization time

Forecasting and Aggregation Site Metaculus

For the Metaculus question “When will a general-purpose AI system be developed and published?”, which corresponds to the AGI realization date, the 25% estimated date is 2030, the 50% date is 2039, and the 75% date is around 2060 (as of December 14, 2022).

How to read this result depends on the interpretation of probability, but the 25% estimate means that AGI will be realized by around 2030, within 10 years, with luck about as good as tossing a coin twice and getting heads both times.

The 50% estimate shows that AGI being realized by approximately 2040 is quite possible.

There is certainly a 13% chance that it will not happen this century, but the important point here is that AI experts and amateurs (presumably the forecasters are interested in and knowledgeable about AI-related news) are assigning a large, non-negligible probability to AGI being realized in the first half of this century.

Blog Posting Site LessWrong

In addition, a comprehensive report (200 pages long) on LessWrong, a website where topics in the natural and social sciences are posted and discussed in blog format, estimates the timing of the realization of TAI (transformative AI, roughly AGI that will have a significant impact on society). The following is the report, which estimates the 50% probability of TAI realization to be around 2050.

Draft report on AI timelines – LessWrong Hi all, I’ve been working on some AI forecasting research and www.lesswrong.com

In 2022, the author significantly moved this forecast forward, putting the 50% probability of realization at around 2040.

Two-year update on my personal AI timelines – LessWrong I worked on mydraft report on biological anchors for forecast www.lesswrong.com

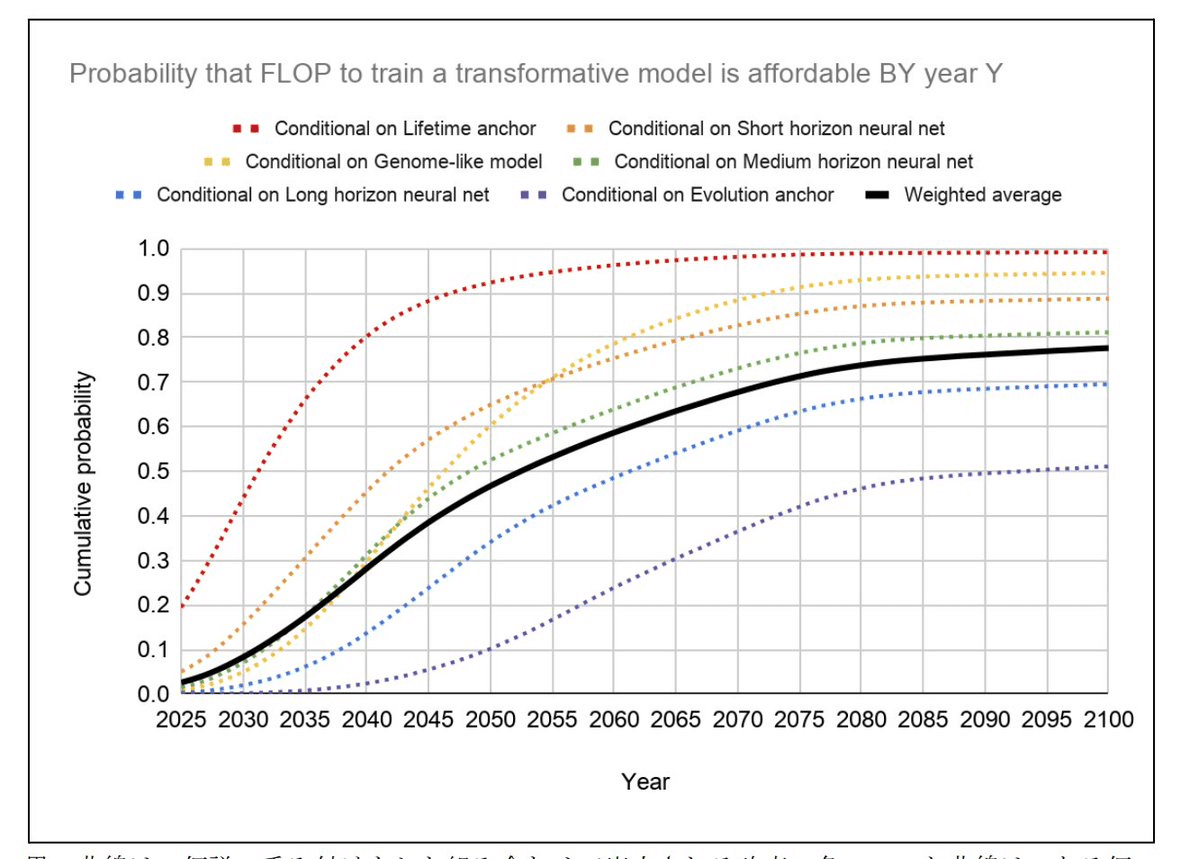

In brief, the above report uses the biological human brain as a reference and discusses when the current AI model will reach the learning level of the human brain and makes the assumption that TAI will be realized when it reaches that level (bioanchor hypothesis).

In more detail, the report considers six hypotheses about the amount of learning of the human brain (the neural net anchor in short-, medium-, and long-term variants, the genome anchor, the lifetime anchor, and the evolutionary anchor) and combines them, weighted by the author’s subjective certainty in each. The time when the combined amount of learning is reached is estimated in the form of a cumulative distribution function, taking into account the evolution of hardware, the efficiency of algorithms, and the increasing willingness of companies and countries to spend.

Among these, the neural network anchor considers the amount of learning required if the current AI model (as of 2020) were to achieve the computational speed of the brain. To calculate the amount of learning, the report first makes a rough estimate of the computation speed of the human brain (10^16 FLOPS), then estimates the number of parameters of a neural network model that can achieve that computation speed, and from the number of parameters estimates the amount of learning (number of samples) required for such a model. The time required to train on one sample is then classified by whether it takes a few seconds (short term), a few days (medium term), or a few years (long term). Finally, the computation speed of the neural network (10^16 FLOPS) x the number of samples (estimated) x the number of seconds per sample (divided into short-term, medium-term, and long-term cases) yields the amount of training FLOPs.

*FLOPs is an indicator often used as a proxy for the computational cost of a model and indicates the number of floating-point multiplication and addition operations (number of floating-point operations). It is a different index from FLOPS (Floating-point Operations Per Second), which is used as an index of computer processing speed.

Are cost indicators necessarily appropriate? 3 main points ✔️ Discuss the pros and cons of various cost me ai-scholar.tech
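The multiplication described for the neural network anchor can be sketched in a few lines of Python. This is only an illustration, not the report's actual model: the sample count is a hypothetical placeholder, and the per-sample durations are just one reading of "a few seconds", "a few days", and "a few years".

```python
# Minimal sketch of the neural network anchor arithmetic:
# training FLOPs = FLOP per second x number of samples x seconds per sample.

BRAIN_FLOPS = 1e16  # brain-speed figure quoted in the text (FLOP per second)

# One reading of "a few seconds / days / years"; these exact values are assumptions.
SECONDS_PER_SAMPLE = {
    "short": 3.0,
    "medium": 3 * 24 * 3600.0,
    "long": 3 * 365 * 24 * 3600.0,
}

# Hypothetical placeholder; the report derives this from the parameter count.
HYPOTHETICAL_NUM_SAMPLES = 1e12


def training_flops(num_samples, seconds_per_sample, flops_per_second=BRAIN_FLOPS):
    """Return the total training compute in FLOPs."""
    return flops_per_second * num_samples * seconds_per_sample


for horizon, seconds in SECONDS_PER_SAMPLE.items():
    total = training_flops(HYPOTHETICAL_NUM_SAMPLES, seconds)
    print(f"{horizon:>6} horizon: {total:.1e} FLOPs")
```

The point of the sketch is only that the three horizons differ by many orders of magnitude for the same model size, which is why the report treats them as separate hypotheses.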

The genome anchor assumes that the number of parameters is about the same as the human genome information, and the time required to train one sample is several years.

Unlike the neural net anchor, the lifetime anchor does not go into the details of the current AI model (e.g., if the number of parameters based on the speed of inference is this much, then the amount of learning is this much). It calculates the amount of learning purely from the computation speed of the human brain over a lifetime of about 30 years (the most optimistic hypothesis).
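As a rough illustration, the lifetime anchor amounts to a single multiplication. The sketch below reuses the 10^16 FLOPS brain-speed figure quoted for the neural net anchor, which is an assumption here; other estimates of brain computation would shift the result by an order of magnitude.

```python
# Minimal sketch of the lifetime anchor: brain speed x seconds in about 30 years.

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def lifetime_anchor_flops(brain_flops=1e16, years=30):
    """Total computation a brain running at `brain_flops` performs in `years`.

    The default brain speed is the figure quoted for the neural net anchor;
    treating it as the right value for this anchor is an assumption.
    """
    return brain_flops * years * SECONDS_PER_YEAR


print(f"{lifetime_anchor_flops():.2e} FLOPs")
```

With these inputs the total comes out a little under 10^25 FLOPs, far smaller than the evolutionary anchor would require, which is why this is the most optimistic of the hypotheses.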

The evolutionary anchor requires the amount of learning performed by the brains of all animals that have ever existed on Earth (the most conservative hypothesis).

The probability of reaching the amount of learning for each of the six hypotheses can thus be graphed in the form of a cumulative distribution function as shown below.

The black line is the average of all six hypotheses; on it, the probability of TAI being realized rises to about 10% by 2030, 50% by 2050, and nearly 80% by 2100.

As I said earlier, in 2022, the author has projected a significantly accelerated TAI realization time of 15% by 2030, 35% by 2036, 50% by 2040, and 60% by 2050.

Optimistic AGI Realization Timing Estimation Report

The above was an introduction to a hypothesis that is neither aggressive nor conservative, but I will also reiterate the aggressive hypothesis (introduced in the Generalized Intelligence section).

The following report posted on LessWrong uses the Lifetime Anchor Hypothesis (the hypothesis that an AI model will develop intelligence similar to humans when it reaches the amount of computation that the brain does from birth to about age 30).

AI Timelines via Cumulative Optimization Power: Less Long, More Short – LessWrong TLDR: We can best predict the future by using simple models w www.lesswrong.com

As shown in the figure below, the author estimates that crow-level artificial intelligence has already been realized and, extrapolating the computational progress of current large-scale language models, puts the probability of AGI being realized by 2032 at 75%. The author says that AGI could be feasible as early as 2026, which is a prediction that AGI will arrive very soon.

Expert Questionnaire on AI Progress

Various surveys have asked experts about the AGI realization date; the following is the most recent one, from 2022, which appears to have a relatively large number of respondents.

2022 Expert Survey on Progress in AI Collected data and analysis from a large survey of machine le aiimpacts.org

The analysis is based on 738 responses from researchers who presented at the NeurIPS or ICML conferences in 2021, and there were other interesting questions in addition to the AGI realization date.

- The aggregate forecast time to a 50% chance of HLMI was 37 years, i.e. 2059 (not including data from questions about the conceptually similar Full Automation of Labor, which in 2016 received much later estimates). This timeline has become about eight years shorter in the six years since 2016, when the aggregate prediction put 50% probability at 2061, i.e. 45 years out. Note that these estimates are conditional on “human scientific activity continu[ing] without major negative disruption.”

- The median respondent believes the probability that the long-run effect of advanced AI on humanity will be “extremely bad (e.g., human extinction)” is 5%. This is the same as it was in 2016 (though Zhang et al 2022 found 2% in a similar but non-identical question). Many respondents were substantially more concerned: 48% of respondents gave at least 10% chance of an extremely bad outcome. But some much less concerned: 25% put it at 0%.

- The median respondent believes society should prioritize AI safety research “more” than it is currently prioritized. Respondents chose from “much less,” “less,” “about the same,” “more,” and “much more.” 69% of respondents chose “more” or “much more,” up from 49% in 2016.

- The median respondent thinks there is an “about even chance” that a stated argument for an intelligence explosion is broadly correct. 54% of respondents say the likelihood that it is correct is “about even,” “likely,” or “very likely” (corresponding to probability >40%), similar to 51% of respondents in 2016. The median respondent also believes machine intelligence will probably (60%) be “vastly better than humans at all professions” within 30 years of HLMI, and the rate of global technological improvement will probably (80%) dramatically increase (e.g., by a factor of ten) as a result of machine intelligence within 30 years of HLMI.

The HLMI (high-level machine intelligence, i.e., roughly human-level intelligence) realization date estimates are more conservative than the Metaculus results and the LessWrong reports (the 50% estimated date of HLMI realization is around 2060).

Prediction of AGI realization time by prominent figures

Other references to AGI by prominent people are also included for reference.

According to the source below, futurist Ray Kurzweil mentions that by 2029, computers will pass the Turing test and be able to do everything humans can do much better than humans.

Conversations with Maya: Ray Kurzweil – Society for Science Maya Ajmera, President & CEO of Society for Science & www.societyforscience.org

Elon Musk’s statement that he would be surprised if general-purpose artificial intelligence (AGI) is not a reality by 2029 has garnered attention.

2029 feels like a pivotal year. I’d be surprised if we don’t have AGI by then. Hopefully, people on Mars too.— Elon Musk (@elonmusk) May 30, 2022

Holden Karnofsky, CEO of Open Philanthropy, a research and funding foundation based on “effective altruism,” also believes that the likelihood of transformative AI is more than 10% within 15 years (by 2036), about 50% within 40 years (by 2060), and about two-thirds by the end of this century.

AI Timelines: Where the Arguments, and the “Experts,” Stand What the best available forecasting methods say – and why the www.cold-takes.com

Brain-referenced AI (supplemental)

It is believed worldwide that the development of neuroscience will contribute to the path to truly general-purpose artificial intelligence.

A paper calling for the promotion of neuroscience for the development of artificial intelligence was announced in October in the following tweet. The co-authors appear to include Yann LeCun and Yoshua Bengio, two of the godfathers of deep learning, among others.

Massive whitepaper just dropped on why neuroscience progress should continue to drive AI progress: https://t.co/SD59JMZybj Argues for an embodied turing test. Needed: real interdisciplinary people, shared platform(s), fundamental research— KordingLab 🦖 (@KordingLab) October 20, 2022

Recently, the Free Energy Principle, a theory of the brain proposed by Karl John Friston around 2006, which he claims could be a unified theory of the brain, has been attracting attention. The theory states that the perception, learning, and behavior of an organism are determined in such a way as to minimize a cost function called free energy.

There has been an increasing movement recently to apply this theory to AI, as discussed by Professor Tetsuya Ogata of Waseda University in the second half of the following lecture on YouTube.

Active Inference in Robotics and Artificial Agents: Survey and Challenges Active inference is a mathematical framework which originated arxiv.org

Other new algorithms titled “brain-based artificial intelligence” have been announced by Kyoto University and Osaka University.

https://t.co/RhOzBMibVK https://t.co/OcM62z1j0H

Recently, Kyoto University and Osaka University seem to be devising new algorithms with low power consumption and data efficiency, such as brain-based artificial intelligence and fluctuation learning.

I wonder what is happening in the development of new AI algorithms that can replace deep learning in the world.— bioshok(INFJ) (@bioshok3) October 29, 2022

The godfathers of deep learning, Geoffrey Hinton, Yann LeCun, and Yoshua Bengio, are also each putting forth ideas for the next generation of AI.

・Geoffrey Hinton proposed GLOM and the Forward-Forward Algorithm.

・In his paper, A Path Towards Autonomous Machine Intelligence, Yann LeCun proposed an algorithm that uses a world model.

・Yoshua Bengio proposed an algorithm called GFlowNet to realize the System 2 described in Daniel Kahneman’s “Thinking, Fast and Slow”.

GFlowNet Foundations Generative Flow Networks (GFlowNets) have been introduced as arxiv.org

As described above, research on artificial intelligence using the brain as a reference (the free energy principle, brain-based artificial intelligence, world models, GLOM, the Forward-Forward algorithm, GFlowNet, and other novel algorithms) has only just begun, but in order to overcome the current paradigm of artificial intelligence, the world will likely invest aggressively in this research and development in the future.

Connectome (personal forecast)

Here is an analysis of the brain connectome that I use as a direct basis for my belief that AGI will be realized between 2035 and 2040.

(This is not strictly true, of course, but it is one of the supporting lines I used when thinking about the timing of the realization of general-purpose artificial intelligence.)

The following Harvard study attempts to map the connections (connectome) between neurons in the brain, rendering the three-dimensional structure of 50,000 cells (presumed neurons).

A connectomic study of a petascale fragment of human cerebral cortex We acquired a rapidly preserved human surgical sample from th www.biorxiv.org

Metaculus also gives about a 60% probability that by the end of the decade a connectome 1,000 times larger (exabytes instead of today’s petabytes) will be mapped and published.

Will a exascale volume of connectome be mapped and revealed to the public by June 2031? Metaculus is a community dedicated to generating accurate pre www.metaculus.com

Assuming that mapping of 50,000 neurons is completed in 2022 and that mapping can be increased 1000-fold every 10 years, mapping of 100 billion neurons will be completed by about 2040. This is about the number of neurons in the entire human brain.
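The extrapolation can be checked with a short calculation. This is a sketch under exactly the assumptions stated above (50,000 neurons in 2022, a 1000-fold increase every 10 years, smooth exponential growth in between); the function name and parameters are illustrative. Taken literally, these inputs put the crossing point in the early 2040s, so "about 2040" is best read as a round figure.

```python
import math


def year_when_mapped(target_neurons, start_neurons=5e4, start_year=2022,
                     factor_per_decade=1000.0):
    """Year in which `target_neurons` can be mapped, assuming the mappable
    count grows smoothly by `factor_per_decade` every 10 years."""
    decades = math.log(target_neurons / start_neurons) / math.log(factor_per_decade)
    return start_year + 10 * decades


WHOLE_BRAIN_NEURONS = 1e11  # about 100 billion, as in the text

print(f"whole brain mapped around {year_when_mapped(WHOLE_BRAIN_NEURONS):.0f}")
```

Because the growth is exponential, the result is not very sensitive to the starting count: a tenfold error in the 2022 figure shifts the date by only a little over three years.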

I also personally feel that the realization of AGI does not require information on the structural level of the connectome of the entire human brain. Therefore, assuming that the time when 10% of the connectome structure of the entire human brain is clarified is the time when the principle of AGI is also clarified, the principle of AGI may be clarified by the mid-2030s.

Summary of AGI realization time

The above terms (AGI, HLMI, TAI, etc.) all refer to roughly the same thing, and the predictions of when AGI will be realized can be summarized as follows, from aggressive to conservative.

- LessWrong optimistic guess report -> 2026 to 2032

- Mr. Ray Kurzweil -> 2029

- Mr. Elon Musk -> 2029

- Metaculus (collective knowledge forecast) -> around 2040

- LessWrong comprehensive report -> circa 2040 (as of 2022; the author moved the prediction forward from circa 2050 as of 2020)

- Expert Questionnaire, Holden Karnofsky -> circa 2060

Based on the above results, it appears that the aggressive estimate is around 2030, the slightly conservative estimate is around 2060, and the intermediate estimate is around 2040.

Personally, while taking into account the above results, I am slightly optimistic that AI that is general-purpose and active (curious and questioning about the world) to the human level will be realized between 2035 and 2040.

From the brain-referenced AI side, I personally feel that AGI will be realized around 2035~2040 if breakthroughs in the fields of free energy principles, brain-based artificial intelligence, and brain neuroscience occur in the mid-2030s (extrapolation of connectome decoding) and are implemented within a few years.

Super Intelligence

I have talked about generalized intelligence and AGI, and the last one is the estimation of when superintelligence will be realized. The conclusion is that superintelligence depends on the time of AGI realization, and if AGI is developed between 2035 and 2040, superintelligence will be realized between 2038 and 2045. I will explain in the following order.

- Definition of Superintelligence and the Intelligence Explosion

- Time span from AGI to superintelligence

- AI Alignment and Risk of Human Existence (Aside)

- Summary of Superintelligence Realization Timeframe

Definition of Superintelligence and the Intelligence Explosion

Oxford University professor of philosophy Nick Bostrom defines superintelligence as “an intelligence that is much smarter than the best human brains in virtually all fields (including scientific creativity, general knowledge, and social skills).”

How long before superintelligence? Considers some arguments for thinking that superhuman artific nickbostrom.com

Recently, the Japanese anime “Beatless” and “Vivy,” the movie “Transcendence,” the manga “AI no idenshi,” and the novel “Self-Reference ENGINE” by Japanese novelist Tou Enjou have depicted machine intelligence far beyond that of humankind.

Many of you may be thinking, “Isn’t superintelligence science fiction?” However, Nick Bostrom has examined the possibility that, if AGI were realized, it could develop into superintelligence not in decades but perhaps in years, or even in minutes if things moved faster than imagined.

This is mainly because compared to humans, digital intelligence can easily increase its storage capacity and computing speed.

Once a human-level intelligence is created, it may be possible to easily increase its memory or computational speed by many orders of magnitude by extending the hardware and data resources (e.g., by feeding it information from the Internet) without algorithmic improvements.

It also considers the possibility of an intelligence explosion, in which the AI improves itself autonomously and recursively increases its own capabilities at a rapid pace, without external hardware enhancements, data resource expansions, or software improvements made by humans.

(Superintelligence by Nick Bostrom, Chapter 4: The Speed of the Intelligence Explosion)

Time span from AGI to superintelligence

According to the Metaculus forecast below, as of December 14, 2022, once AGI is developed, there is a 50% probability that superintelligence will follow within about 12 months and a 75% probability that it will follow within about 70 months.

After an AGI is created, how many months will it be before the first superintelligence? Metaculus is a community dedicated to generating accurate pre www.metaculus.com

So, based on the above Metaculus prediction, if AGI is realized around 2040, there is a possibility that super intelligence will be developed around 2041~2046.
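The date range above follows from adding the two Metaculus lags to the assumed AGI year. A minimal sketch, where the function name is illustrative and the AGI year of 2040 is the assumption used in the text:

```python
def superintelligence_year(agi_year, months_after_agi):
    """Calendar year reached `months_after_agi` months after AGI."""
    return agi_year + months_after_agi / 12


AGI_YEAR = 2040  # assumed AGI realization year, as in the text

median_case = superintelligence_year(AGI_YEAR, 12)  # 50% lag of about 12 months
slower_case = superintelligence_year(AGI_YEAR, 70)  # 75% lag of about 70 months

print(f"50% case: {median_case:.1f}, 75% case: {slower_case:.1f}")
```

The 70-month lag lands late in 2045, which rounds to the upper end of the 2041~2046 range.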

In addition, according to the results of the expert survey introduced above, there is a 60% probability that intelligence that overwhelms humans in all occupations will emerge within 30 years of the creation of HLMI (high-level machine intelligence). Taking a conservative view, if HLMI is realized around 2040, super intelligence may exist by 2040+30, i.e., by 2070. Even if we take a very conservative view and place HLMI realization around 2060, which is the 50% prediction of the expert survey, there is a possibility that super intelligence will be realized by 2090.

In the same survey, 54% of respondents answered “about even,” “likely,” or “very likely” when asked about the possibility of an intelligence explosion that will speed up research and development of AI systems by an order of magnitude or more in less than 5 years.

With that in mind, I personally think that a 60% probability of superintelligence within 30 years of HLMI realization is too slow an estimate of the time span.

Aside from that, I would say that at least half of AI experts believe that an intelligence explosion is quite possible.

AI Alignment and Risk of Human Existence (Aside)

Research aimed at bringing AI systems closer to the designer’s intended goals and interests is called AI alignment.

Currently, there is significant investment in research to improve the performance of AI, to curb discriminatory remarks by recent AI systems, and to improve the safety of automated driving.

On the other hand, the number of researchers working to reduce the existential risk to humanity from advanced AI is still low, estimated at several hundred as of 2022, according to the report below. Demand for reducing AI safety risk is expected to increase rapidly, and the number of researchers is expected to grow at a rate of 10~30% annually (to an estimated 1,000 by 2030).

Estimating the Current and Future Number of AI Safety Researchers – LessWrong SUMMARY I estimate that there are about 200 full-time technic www.lesswrong.com
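To see how the 10~30% annual growth range relates to the figure of about 1,000 researchers by 2030, here is a small compound-growth sketch. The starting headcount of 300 is a hypothetical stand-in for "several hundred", not a number taken from the report.

```python
def projected_headcount(start_count, annual_growth, years):
    """Compound growth: start_count * (1 + annual_growth) ** years."""
    return start_count * (1 + annual_growth) ** years


HYPOTHETICAL_START_2022 = 300  # illustrative stand-in for "several hundred"
YEARS_TO_2030 = 2030 - 2022

low = projected_headcount(HYPOTHETICAL_START_2022, 0.10, YEARS_TO_2030)
high = projected_headcount(HYPOTHETICAL_START_2022, 0.30, YEARS_TO_2030)

print(f"2030 headcount between about {low:.0f} and {high:.0f}")
```

Under this assumed starting point, the 10% and 30% growth rates bracket the estimate of roughly 1,000 researchers in 2030.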

With AI rapidly becoming more practical, AI research organizations such as DeepMind and OpenAI are beginning to see the importance of research to align AI with human intentions and ethical standards. This year, the following considerations have been issued by both of them in relation to AI alignment issues.

Our approach to alignment research Our approach to aligning AGI is empirical and iterative. We a openai.com

How do we assess the moral capacities of artificial systems? One novel approach applies insights from developmental psychology to the grounded study of morality in AI.

Work by @weidingerlaura, @mgreinecke, and Julia Haas: https://t.co/m9H1Fxd6YC pic.twitter.com/3NIiZB7WSL— DeepMind (@DeepMind) August 24, 2022

Thus, as AI becomes more practical and general-purpose artificial intelligence becomes more likely, discussion is gradually growing about how to reduce the risk of fatal damage to humanity that may occur if AI ignores human intentions.

The following LessWrong discussion and paper also estimate the probability of catastrophic damage to humanity at approximately 10%, assuming the birth of superintelligence.

How Do AI Timelines Affect Existential Risk? – LessWrong This report is my MLSS final project. • PDF version here. … www.lesswrong.com

Below is a report, published this year on a website dedicated to professional discussion of AI alignment, warning that current methods such as reinforcement learning from human feedback will increase the risk of fatal damage to humanity from superintelligence.

The idea behind this seems to lie in Nick Bostrom’s “orthogonality thesis” about intelligence and values. Simply put, however smart an intelligence may be, it may still hold a value such as filling the universe with paper clips, in which case humanity may be treated like an anthill that is ignored when building a dam.

(Superintelligence by Nick Bostrom, Chapters 7 and 8)

Some non-profit organizations have emerged to provide career support to reduce such risks to human existence.

These organizations provide research and support to help people turn to careers that effectively address the world’s most pressing issues.

The reference URL is placed below. The site has a wide range of discussions from the feasibility of AGI to the risks of super-intelligence, and the content is very comprehensive, so if you are interested, please take a look.

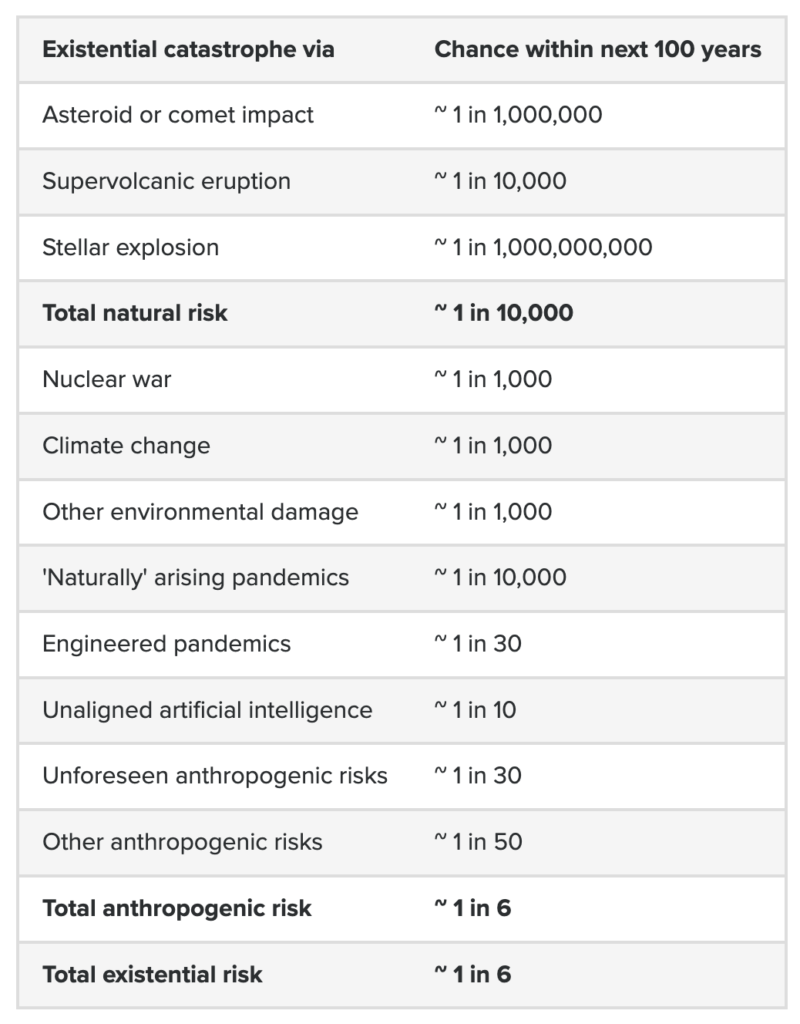

In addition, the last part of Chapter 4 of the above website discusses the general risk of human extinction, not just the risk from AI. According to philosopher Toby Ord, as shown in the table below, the probability of an existential catastrophe for humanity within 100 years is quite large, at 1/6. Of that, 60% is the risk from AI. (A simple calculation shows that the risk from AI is about 10%, and the risk from everything other than AI is about 6~7%.)
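The simple calculation in parentheses can be written out as follows. This sketch uses only the two figures quoted above (a total risk of 1/6 and an AI share of 60%) and treats the rest as a single non-AI remainder.

```python
from fractions import Fraction

total_risk = Fraction(1, 6)   # catastrophe within 100 years, as quoted
ai_share = Fraction(6, 10)    # share of that risk attributed to AI, as quoted

ai_risk = total_risk * ai_share      # risk attributed to AI
non_ai_risk = total_risk - ai_risk   # everything else combined

print(f"AI: {float(ai_risk):.1%}, non-AI: {float(non_ai_risk):.1%}")
```

Exact fractions are used so that the split comes out as 1/10 and 1/15 with no rounding noise.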

As the likelihood of AGI and superintelligence becoming a reality increases in the next decade, the discussion of catastrophic risks to humanity from superintelligence and general-purpose artificial intelligence, such as those described above, will probably come into full swing.

Personally, I feel that the Metaculus prediction is a little too early (12 months) and the results of the expert survey (within 30 years) are too late as a time span between the birth of general artificial intelligence and the realization of super intelligence.

It is true that once AGI is realized, it could be evolved into superintelligence by investing resources without pause, but given the excessively large risk implied by a 10% probability of human extinction, the shift to superintelligence is likely to be made slowly and deliberately, over 3~5 years.

Also, I personally feel that 30 years is too late for the results of the expert survey.

Here is why.

Computational processing of the human brain is estimated to be about 10^15 FLOPS.

How Much Computational Power Does It Take to Match the Human Brain? – Open Philanthropy Open Philanthropy is interested in when AI systems will be ab www.openphilanthropy.org

Assuming 10^16 FLOPS per brain to allow a margin, and assuming supercomputer performance of 10^21 FLOPS around 2040, a simple calculation shows that the equivalent of about 100,000 people can be computationally processed (about 1 million people at 10^15 FLOPS per brain).

Subjectively, I find it unlikely that, in that state, more than 10 years would pass without resources being expanded to a scale at which superintelligence can be born.

(Historically, supercomputer performance has improved by a factor of about 100 every 10 years, but I have assumed a factor of only 1000 by around 2040 to account for the fact that we will be approaching the limits of miniaturization. Current supercomputers are about 10^18 FLOPS, so this gives 10^21 FLOPS. Perhaps the order of magnitude could be even higher with a leap in neurochips, etc.)
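The brain-equivalent estimate is a single division, sketched below. The constants are the figures quoted in the text; the variable and function names are illustrative. Depending on whether the 10^15 FLOPS estimate or the 10^16 FLOPS margin figure is used for one brain, the result is roughly a million or roughly a hundred thousand brain equivalents.

```python
BRAIN_FLOPS_ESTIMATE = 1e15       # estimated human brain computation (FLOPS)
BRAIN_FLOPS_WITH_MARGIN = 1e16    # the same with a 10x safety margin
SUPERCOMPUTER_FLOPS_2022 = 1e18   # approximate current supercomputer
ASSUMED_GROWTH_BY_2040 = 1000     # growth factor assumed in the text

supercomputer_flops_2040 = SUPERCOMPUTER_FLOPS_2022 * ASSUMED_GROWTH_BY_2040


def brain_equivalents(machine_flops, brain_flops):
    """How many human brains' worth of computation a machine provides."""
    return machine_flops / brain_flops


print(f"with margin:    {brain_equivalents(supercomputer_flops_2040, BRAIN_FLOPS_WITH_MARGIN):,.0f}")
print(f"without margin: {brain_equivalents(supercomputer_flops_2040, BRAIN_FLOPS_ESTIMATE):,.0f}")
```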

So, in conclusion, I think a reasonable estimate is that superintelligence will be realized within 3 to 10 years after general-purpose artificial intelligence is realized. Perhaps it could be earlier, or some organization could scale it to superintelligence in a few months. In fact, evolution over a short period, such as a few months or days, seems more likely than a time span of 10 years or more. (Nick Bostrom seems to think the same way.)

Personally, I think it depends on the conditions: if AGI is realized in 2040, it will be within 5 years; if AGI is realized in 2035, superintelligence will be realized within 10 years. This is because I believe that AI safety research will progress during those five years, and motivation to promote the development of superintelligence will increase.

In other words, if we assume 2035~2040 as the time of realization of general artificial intelligence, we can expect super intelligence to exist in this world by 2038~2045, and if we assume 2060 as the time of AGI realization, we can expect super intelligence to exist by 2065.

However, if computational resources are already being used at the supercomputer level to realize AGI, or if software improvements do not make any progress, there is a possibility that superintelligence will not be born for a long period of time (several decades), so uncertainties remain.

However, it is important to note that there is a non-negligible probability that superintelligence will be born in the early part of this century. This will be a topic for further social discussion when generalized intelligence begins to permeate society (late 2020s to 2030s).

Social impact

We have estimated the timing of realization of generalized intelligence, AGI, and superintelligence, in that order. Next, I would like to consider the impact that the realization of these technologies will have on society as a whole. I will explain in the following order.

- Hardware Improvements

- Economic Impact

- Political Impact

Hardware Improvements

No matter how smart the software becomes, one of the conditions for its widespread use in society will be the availability of AI software on affordable hardware.

While 5G and 6G technologies may help with computation from the cloud side, hardware improvements should accelerate the spread.

The above video discusses how smart superintelligence can be in principle, and what the constraints are.

The entire video is very interesting, but at around the 54:20 mark, Mr. Takahashi of RIKEN predicts that anyone will be able to have 10^16 FLOPS of computational processing power of the brain on a mobile device by around 2030.

For example, the following article states that neuromorphic chips can perform certain workloads 1,000 times faster than conventional processors and are 10,000 times more efficient.

Intel announces brain-type system incorporating 100 million neurons On March 18, 2020 (U.S. time), Intel ... a new neuromorphic computing ... with the computing power of approximately 100 million neurons eetimes.itmedia.co.jp

Also, NVIDIA is releasing (or planning to release) a small AI computer that can perform 10^13 to 10^14 AI operations per second (about $200~$2000).

[Breaking] NVIDIA announces “Jetson Orin Nano”: about 80 times the performance of the previous Nano, an ultra-compact AI computer; the Orin family now has three models – Robosta At “GTC 2022,” NVIDIA CEO Jensen Huang, in his keynote ... robotstart.info

Also, the AI-dedicated chip in the latest iPhone as of 2022 is likely to be around 10^13 FLOPS.

Apple A15 – Wikipedia en.wikipedia.org

Therefore, we can conservatively estimate that by 2030, individuals will be able to buy about 10^15 FLOPS of computing speed.
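One way to judge how conservative this is: compute the yearly improvement factor that affordable hardware would need in order to go from the 10^13 to 10^14 range of 2022 devices to 10^15 by 2030. This is a sketch; the 8-year span and the assumption of a constant yearly factor are simplifications, and the function name is illustrative.

```python
def required_annual_factor(start_flops, target_flops, years):
    """Constant yearly improvement factor needed to reach the target."""
    return (target_flops / start_flops) ** (1 / years)


YEARS = 2030 - 2022
TARGET_FLOPS = 1e15

from_low_end = required_annual_factor(1e13, TARGET_FLOPS, YEARS)
from_high_end = required_annual_factor(1e14, TARGET_FLOPS, YEARS)

print(f"needed yearly factor: {from_high_end:.2f}x to {from_low_end:.2f}x")
```

Starting from the high end of today's range, the target requires performance at a given price to improve by about a third each year; starting from the low end, it requires close to doubling every year.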

Since the computation speed of the human brain is estimated to be about 10^15 FLOPS here, it is natural to assume that many people will be able to own one moderately smart AI by 2035 with a little leeway.

Economic impact

What level of economic impact is expected when generalized intelligence or AGI such as we have seen so far is released to the world? We would like to look at the following two documents.

First, according to a report estimating the economic impact of AI over the coming decade, the economic value created by AI from 2022 to 2030 will be six times the economic value created by the Internet from 1998 to 2021.

Productivity Gains Could Propel The AI Software Market To $14 Trillion By 2030 – ARK Invest ark-invest.com

There is no guarantee that this will happen, but it is not implausible given the potential for multimodal AI to rapidly reshape all aspects of society.

The following report examines the economic impact over a long-term, multi-decade span.

Could Advanced AI Drive Explosive Economic Growth? – Open Philanthropy www.openphilanthropy.org

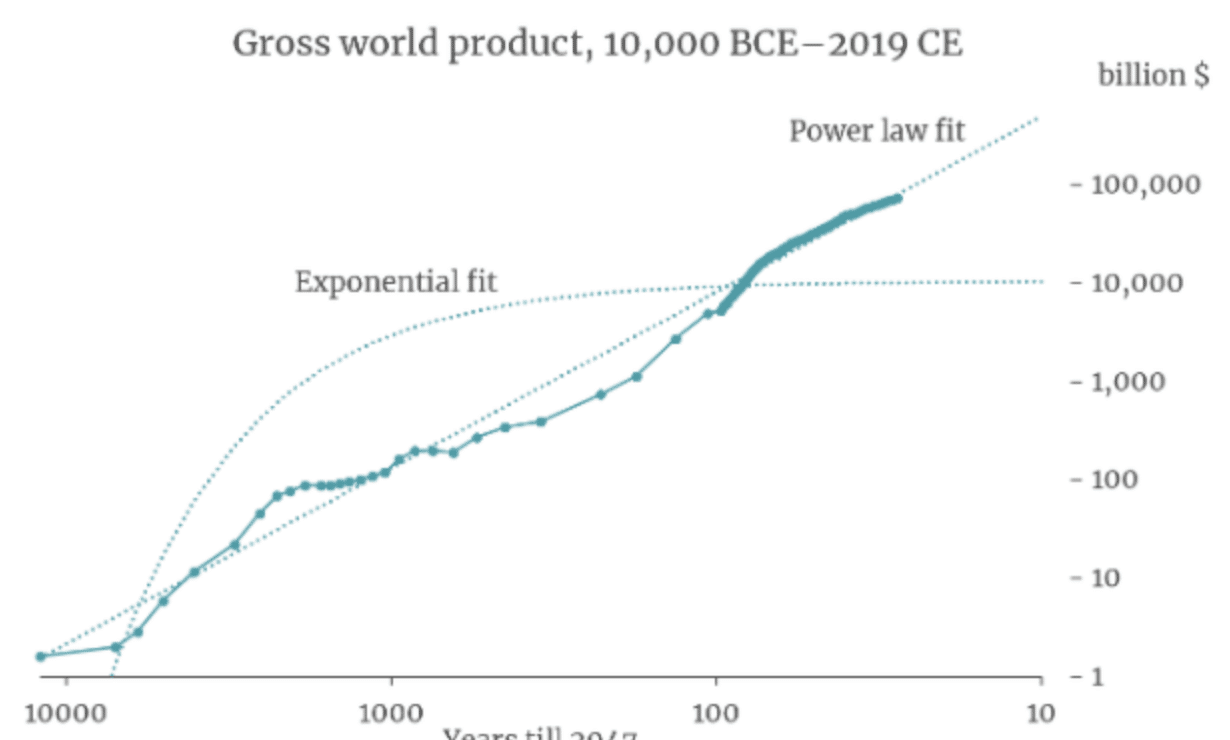

To summarize the report, the long-term trend in GWP (Gross World Product) growth rates reveals the following power-law relationship.

It took thousands of years for the annual growth rate to rise from 0.03% to 0.3%, but only a few hundred years for it to rise from 0.3% to 3%. If this trend continues, we can extrapolate that it will take only a few decades to go from 3% to 30%.
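To make these growth rates more tangible, the following Python sketch converts each annual growth rate into the time the world economy would need to double in size at that rate. The formula is the standard compound-growth doubling time; the function name and the list of rates are illustrative assumptions, not something taken from the report.

```python
import math


def doubling_time_years(annual_growth_rate):
    """Years for an economy to double at a constant annual growth rate.

    annual_growth_rate is a fraction, e.g. 0.03 for 3% per year.
    """
    return math.log(2) / math.log(1 + annual_growth_rate)


# The four growth-rate levels mentioned in the text.
for rate in (0.0003, 0.003, 0.03, 0.30):
    years = doubling_time_years(rate)
    print(f"{rate:.2%} per year -> GWP doubles in about {years:,.1f} years")
```

At 3% the economy doubles in a little over two decades, whereas at 30% it would double in under three years.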

The above report concludes: “The probability of explosive growth occurring during this century is more than 10%. This assumes that there is a 30% or greater probability that a sufficiently advanced AI will be developed in time, and a 1/3 or greater probability that explosive growth will actually occur, provided that this level of AI is developed.”

Since the authors define advanced AI here as AI that can do everything that the highest quality workers can do remotely, it seems fair to say that the development of technology at or above the level of AGI is a prerequisite.

Assuming that AGI is realized by 2040, the report's conditional probability of about 1/3 implies roughly a 30% chance that GWP could then grow by 30% each year.
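The arithmetic behind the report's figure of more than 10% can be sketched as follows. The two inputs are the lower bounds quoted from the report; the variable names are hypothetical, and treating the result as a simple product of the two probabilities is my simplified reading of the quoted reasoning.

```python
# Lower bounds quoted from the report.
p_advanced_ai = 0.30         # P(sufficiently advanced AI is developed in time)
p_growth_given_ai = 1 / 3    # P(explosive growth | such AI is developed)

# Unconditional lower bound: both things have to happen.
p_explosive = p_advanced_ai * p_growth_given_ai
print(f"P(explosive growth this century) >= {p_explosive:.0%}")

# If we instead assume the AI is developed, only the conditional
# probability remains, which is where "roughly 30%" comes from.
print(f"P(explosive growth | advanced AI) >= {p_growth_given_ai:.0%}")
```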

Of course, this is far from certain, but it is important to note that global economic growth rates could rise from a few percent to several tens of percent per year.

Political Impact

What are the political ramifications of the future development of general-purpose artificial intelligence beyond generalized intelligence?

Chapter 4 of the following article, which I mentioned earlier, discusses risks such as the escalation of wars, totalitarianism, and AI unintentionally becoming uncontrollable.

On a more practical level, there are also concerns about privacy violations, technological unemployment, and increased security risks, as described in the article below.

https://www.openphilanthropy.org/research/potential-risks-from-advanced-artificial-intelligence/

Countermeasures (AI governance) against the negative social impacts of such AI systems are being implemented at various scales, from the strategic to the tactical level, as well as through outreach activities. The following article is a good reference as it summarizes which organizations are doing what.

Below are examples of organizations engaged in AI governance, as described in the above article.

FHI, GovAI, CSER, DeepMind, OpenAI, GCRI, CSET, Rethink Priorities, LPP

AI governance is the formulation of local and global norms, policies, laws, processes, politics, and institutions (not just governments) that affect social outcomes from the development and deployment of AI systems (see article above).

A time of great change in the world

I have introduced various discussions that concretely estimate the evolution of AI and its impact on society. What is almost certain is that AI at the level of generalized intelligence will change all areas of society from the late 2020s onward.

As for AGI and superintelligence beyond that, I personally think that AGI will be realized around 2035 to 2040 and that superintelligence will then emerge by 2038 to 2045, but I cannot say for sure due to many uncertainties.

However, at least various experts appear to consider it highly likely that general-purpose artificial intelligence will be realized by the end of this century.

(Of course, there are those who believe that general-purpose artificial intelligence will still be impossible 100 years from now.)

Still, if my sense is correct and AGI is realized around 2040 and superintelligence around 2045, then society will be plunged into a world that is science fiction-like not just metaphorically but overwhelmingly so. All social institutions will have to change.

I think even the definition of what a human being is will be blurred by cyborgization and mind uploading technology. All labor may disappear, all experiences may come through brain-machine interfaces, AI human rights issues will become acute, and the philosophical question of what consciousness is will become critical.

And the possibility that many people may even become immortal cannot be dismissed. This may be difficult to achieve as an extension of the status quo, but it could become feasible if superintelligence accelerates science and technology.

This is something that many of you may experience in your lifetime.

Many people feel that in 2022 we still rely on paper and have not even entered a digital age, let alone an AI age. Most people's impression of AI is that it can recognize images, speak a few words, and, recently, generate amazing images, but that's about it. I think most people assume that nothing in the world will change.

However, as I have been discussing, the world is really going to change dramatically in the next 4 to 10 years, i.e., from the late 2020s to the 2030s, and it will become like science fiction.

I believe that we will see a rapid change in society at a level never experienced before by mankind (even if AGI is not possible).

To use an analogy, it would be like the change from the world of 100 years ago, when there were no smartphones, Internet, or TV, to the world of 2022, where we are now.

I am not sure whether such a rapid change is a good thing or a bad thing, but I am almost certain that it will come within the next 10 years, and I have written this article to make many people aware of this fact.

In the next section, I will post a list of websites that I referred to when writing this article. If you are interested in them, please visit them.

Thank you for reading this long article.

If you have any questions or comments, please feel free to contact me via Twitter.

@bioshok3

Content links related to the future of AI (reference)

I found the entire site below, and the links at the end of it, useful, so I am listing them here.

It includes overviews of many organizations involved in AI safety research.

https://80000hours.org/problem-profiles/artificial-intelligence/#neglectedness

The following sites were also useful.

https://aiimpacts.org/

https://www.cold-takes.com/

https://80000hours.org/problem-profiles/artificial-intelligence/#complementary-yet-crucial-roles

https://www.openphilanthropy.org/

https://www.metaculus.com/help/faq/#whatismetaculus

https://www.lesswrong.com/

https://www.alignmentforum.org/
